Eddie Elizondo | Last updated September 2024 | No Hype Analytics blog

Prediction is often one of the first tools a data science team reaches for: its use cases are widely applicable - demand forecasting, churn forecasting, campaign success prediction, etc. - and every data scientist has learned forecasting. Anyone who has taken basic statistics knows linear regression, too, so forecasting is a familiar topic for everyone. It can even be an easier pitch: with better data and algorithms, we can forecast better than we ever have before. So why do prediction and forecasting models often struggle in practice, and how can we make them better?

While it is true that forecasting gets better every year, that progress masks an unpleasant reality: forecasting in practice is very hard, and over-reliance on it can be a failure mode of AI initiatives. A few words of caution:

- Do not rely on “state-of-the-art” forecasting to solve a problem

- Be wary of AI projects that are heavily dependent on improving a forecast

This doesn’t mean you should ignore forecasting - it’s still very important. It’s reliance on exceptionally strong forecasting ability that often pushes AI projects into trouble. A better approach to AI projects that involve forecasting is:

- Secure a good baseline forecast using today’s standard ML toolkit (boosting, regression, ensemble methods, etc.) - good forecasting is still needed to compete today

- Look for ways to obviate the need for a perfect forecast by managing the uncertainty instead of trying to eliminate it: aggregating demand, reducing decision lead time, improving data sharing, optimizing the placement and size of buffers, etc. Secure a competitive advantage by cleverly managing uncertainty.
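To make two of these levers (aggregating demand and reducing lead time) concrete, here is a minimal Python sketch under textbook assumptions that the post itself does not state: demand is independent across locations and roughly normal from period to period, and buffer size follows the standard safety-stock approximation z × σ × √L (service-level z-score times per-period demand standard deviation times the square root of lead time). The function names, store count, service level, and lead times are hypothetical values chosen only for illustration.

```python
import math
from statistics import NormalDist


def safety_stock(sigma_per_period: float, lead_time_periods: float, service_level: float) -> float:
    """Textbook approximation: z * sigma * sqrt(L).

    Assumes demand is i.i.d. and roughly normal in each period, so the standard
    deviation of demand over the lead time grows with sqrt(L).
    """
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    return z * sigma_per_period * math.sqrt(lead_time_periods)


def pooled_sigma(sigmas: list[float]) -> float:
    """Std. dev. of total demand when location demands are independent (variances add)."""
    return math.sqrt(sum(s * s for s in sigmas))


# Hypothetical example: 4 stores, each with a weekly demand std. dev. of 100 units.
store_sigmas = [100.0] * 4
service = 0.95

# Option A: a separate buffer at every store, 4-week lead time.
separate = sum(safety_stock(s, 4, service) for s in store_sigmas)

# Option B: one aggregated (pooled) buffer, same 4-week lead time.
pooled = safety_stock(pooled_sigma(store_sigmas), 4, service)

# Option C: pooled buffer, with the decision lead time cut to 1 week.
pooled_short = safety_stock(pooled_sigma(store_sigmas), 1, service)

print(f"separate buffers:    {separate:.0f} units")
print(f"pooled buffer:       {pooled:.0f} units")
print(f"pooled, 1-week lead: {pooled_short:.0f} units")
```

Under these assumptions, pooling four independent stores into one buffer halves the total safety stock (a factor of 1/√4), and cutting lead time from four weeks to one halves it again, with no improvement to the forecast itself. Real demand is often correlated across locations, which shrinks the pooling benefit, so treat this as intuition rather than a sizing tool.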

There are heavy assertions above, so let’s look at weather forecasts as a case study. A huge amount of brainpower and money goes into weather forecasting, so it should show forecasting ability beyond what most businesses can practically achieve.

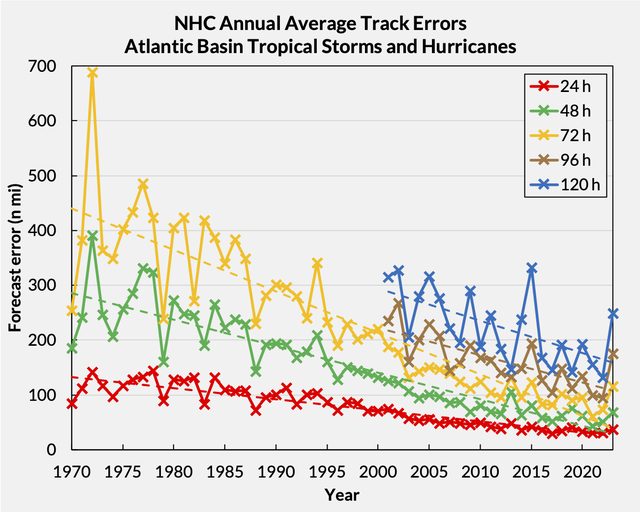

Below is the US National Hurricane Center’s (NHC) average annual track error, in miles, for named storms in the Atlantic over time. Each solid line represents the error (averaged over each season) in forecasting the location of a storm’s center of circulation at a given lead time; the green line, for example, is the error for forecasts made 48 hours in advance. The data is from the NHC website. I tried to keep these charts visually similar to NHC’s own; the only difference is that they include 2023, which NHC published as data but had not added to its charts. The dashed lines are linear trendlines.

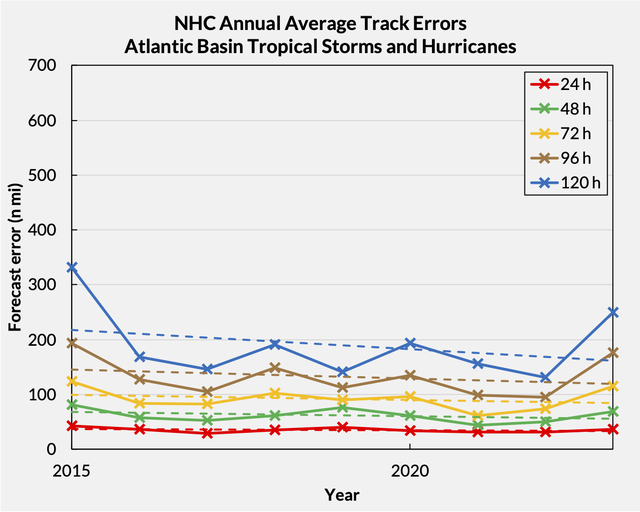

This is a great story - there has been a massive improvement in forecasts since 1970. However, there’s another message if we zoom in on the period since 2015.

Forecast improvement is also flattening, especially at lead times of 96h and below, and this paints a different picture relevant for us today. (If you question that claim without seeing the full data, scroll down to the Appendix section.) There could be many reasons for this: new atmospheric conditions, effects of global warming, etc. Businesses face an analogous set of headwinds that make their forecasting difficult: consumer preferences, macroeconomic tides, competitive dynamics, etc. I see this pattern of forecasting performance broadly. There are big gains from moving above a basic level of forecasting (e.g., improving on simply using the prior week’s sales) with the typical ML toolbox, but it’s hard to achieve levels materially beyond that; improvement usually flattens. Improvement past that point is also very difficult to sustain: the time needed to maintain those models increases exponentially, and it may not be worthwhile.
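One way to measure the gain from going above a basic level of forecasting is to score a model against the naive baseline mentioned above: forecast each week with the prior week’s actual sales. The sketch below is an illustration under my own assumptions, not the author’s method: it uses mean absolute error (MAE) and a simple skill score, 1 - MAE(model) / MAE(naive), and the function names, sales figures, and model forecasts are all hypothetical.

```python
from statistics import mean


def naive_forecast(history: list[float]) -> list[float]:
    """Forecast period i+1 with the actual from period i (e.g., last week's sales)."""
    return history[:-1]


def mae(actual: list[float], predicted: list[float]) -> float:
    """Mean absolute error between two equal-length series."""
    if len(actual) != len(predicted):
        raise ValueError("series must be the same length")
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def skill_vs_naive(history: list[float], model_preds: list[float]) -> float:
    """Skill score 1 - MAE(model) / MAE(naive), scored on history[1:].

    model_preds[i] is the model's forecast for history[i + 1].
    Positive means the model beats the naive baseline; 0 means no gain.
    """
    actual = history[1:]
    return 1 - mae(actual, model_preds) / mae(actual, naive_forecast(history))


# Hypothetical weekly sales and hypothetical forecasts from some ML model.
weekly_sales = [100.0, 120.0, 90.0, 110.0, 130.0]
model_forecasts = [115.0, 100.0, 105.0, 125.0]  # forecasts for weeks 2-5

print(f"naive MAE: {mae(weekly_sales[1:], naive_forecast(weekly_sales)):.2f}")
print(f"model MAE: {mae(weekly_sales[1:], model_forecasts):.2f}")
print(f"skill vs. naive: {skill_vs_naive(weekly_sales, model_forecasts):.2f}")
```

Here the hypothetical model’s MAE is 6.25 against the naive baseline’s 22.5, a skill of about 0.72. Tracking a score like this across model iterations is one way to notice when extra effort stops buying much improvement, the same kind of flattening the hurricane charts show.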

A baseline competency in forecasting is needed to compete, but you won’t win by perfecting a forecast. After putting a baseline forecast in place, think about how to best manage the remaining uncertainty. Often, that baseline competency can be achieved with commoditized tools or standard methods. That means the data science team can focus on unique models for your business, rather than maintaining forecasting models. However, you will need a data science leader who can identify novel areas of modeling value in your business and prioritize the team effectively.

If someone wants to be the anomaly that wins solely with forecasting, remember the weather. And if someone claims they can truly perfect a forecast, I wonder why they are not trading stocks instead.

Appendix

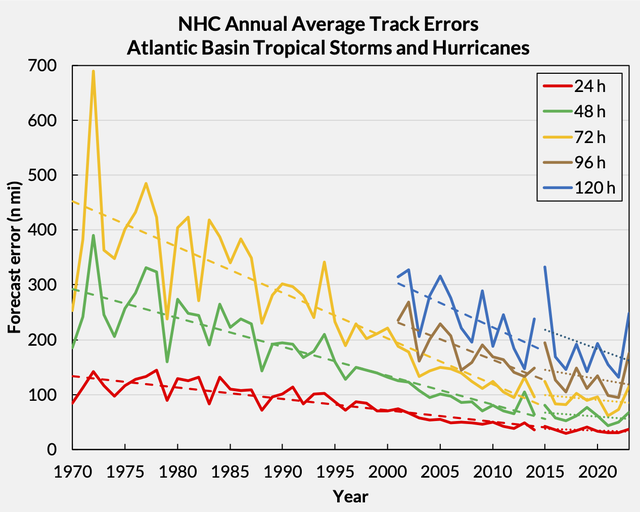

Below is the original NHC graph with two trendlines: 1970-2014 (dashed line) and 2015-2023 (dotted line). There is clear flattening, especially in the 48h, 72h, and 96h forecasts. The 120h picture is muddier, potentially because of the error spikes in 2015 and 2023. But looking only at 2016-2022 could make an even stronger case that the forecast is flattening, so including 2015 and 2023 is a more conservative view that still reaches the same conclusion.
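For readers who want to run this kind of check on their own data, here is a minimal Python sketch of fitting separate least-squares trendlines before and after a break year, with an option to drop outlier years such as the 2015 and 2023 spikes. The series below is synthetic and is NOT the NHC data; it is built so the true slopes are known (-5 miles/year through 2014, -1 mile/year afterward), and the function names are hypothetical.

```python
def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys on xs, i.e., the slope of a linear trendline."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


def split_trend_slopes(years, errors, break_year, exclude=frozenset()):
    """Trendline slopes for years < break_year and years >= break_year.

    Years in `exclude` (e.g., suspected outlier seasons) are dropped before fitting.
    """
    early_x, early_y, late_x, late_y = [], [], [], []
    for year, err in zip(years, errors):
        if year in exclude:
            continue
        if year < break_year:
            early_x.append(year)
            early_y.append(err)
        else:
            late_x.append(year)
            late_y.append(err)
    return ols_slope(early_x, early_y), ols_slope(late_x, late_y)


# Synthetic series, NOT the NHC data: error falls 5 miles/year through 2014, then 1 mile/year.
years = list(range(1970, 2024))
errors = [400 - 5 * (y - 1970) if y < 2015 else 2194 - y for y in years]

early, late = split_trend_slopes(years, errors, break_year=2015)
print(f"1970-2014 slope: {early:.2f} mi/yr | 2015-2023 slope: {late:.2f} mi/yr")

# Sensitivity check in the spirit of the appendix: drop the first and last late-period years.
_, late_trimmed = split_trend_slopes(years, errors, break_year=2015, exclude={2015, 2023})
print(f"2016-2022 slope: {late_trimmed:.2f} mi/yr")
```

A less negative slope in the later period is what "flattening" means in the charts above; rerunning with and without the spike years is a quick way to check that the conclusion does not hinge on them.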

Do you have questions, thoughts, feedback, comments? Please get in touch - I would love to hear from you: eddie@betteroptima.com