Eddie Elizondo | Last updated September 2024 | No Hype Analytics blog

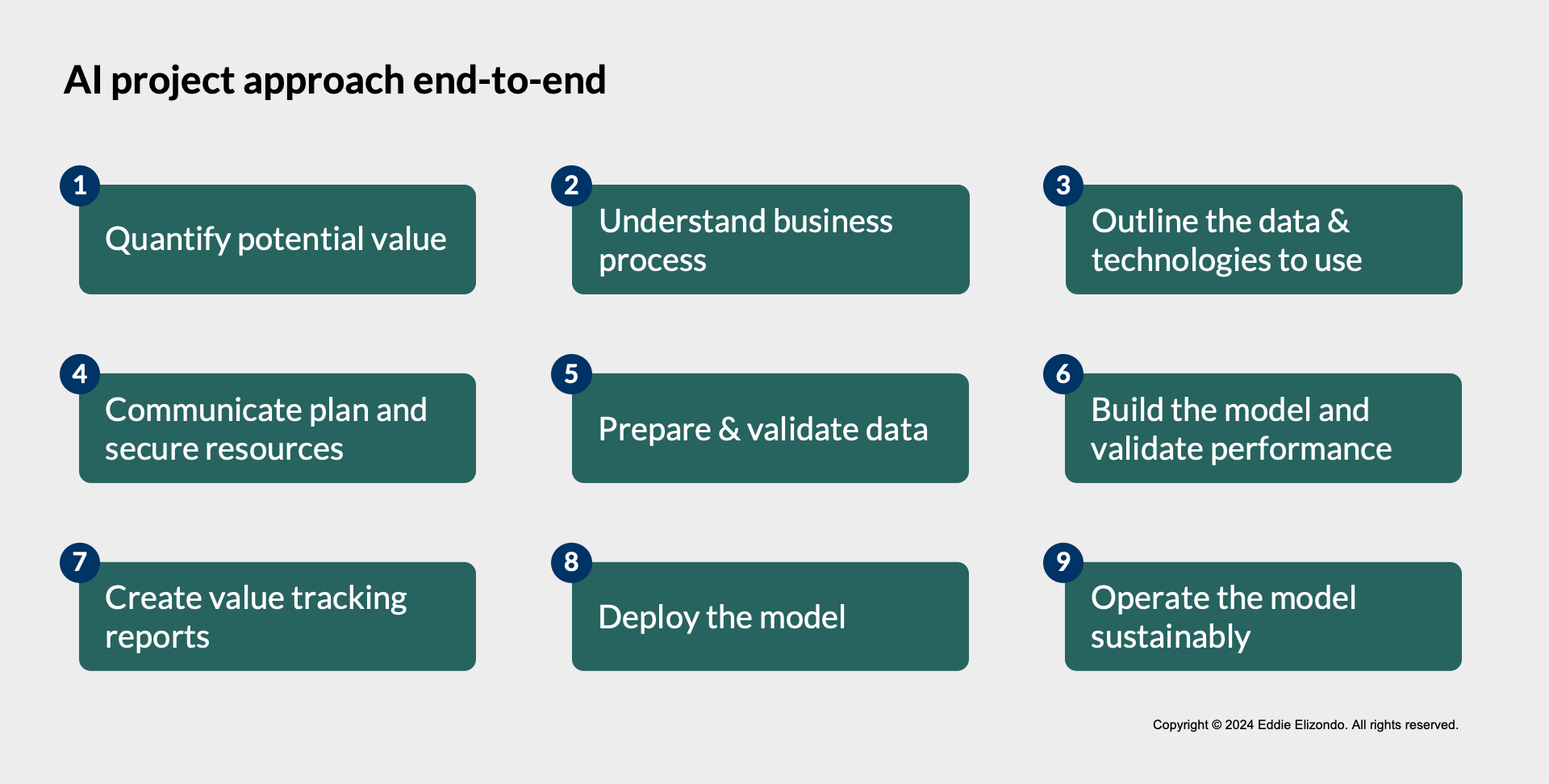

Developing a new AI model (or tool or product) - from idea to successful implementation - is hard. Here is a detailed approach to increase your chance of success, drawn from my years of experience developing AI models of all sizes across industries. I recommend that any data science project requiring two or more weeks of work cover these steps.

There is often not enough emphasis on the parts of a project that are not technical data science, such as identifying which opportunities to prioritize, stakeholder alignment, data preparation and validation, model validation, and model sustainability. These are critical to ensuring a model is permanently integrated into a business process. I’ll focus heavily on those areas, as they apply to any data science project. As always, specific details are best tailored to each project.

1. Quantify the value that will be delivered by the AI model / tool

- Analytics practitioners need to measure the value of their work against executive-level KPIs – revenue, cost, safety, etc. Quantifying value only through a secondary metric, like hours of time saved, is insufficient.

2. Understand the business process the AI model will be built for

- Develop two business process flows: one for the current process and one for the future-state process after the analytics tool is implemented.

- Business users and data science developers need to be aligned on what the analytics model solves for and how it will be used, before development begins. Deeply understanding the process can also uncover potential “model leakages” where model inputs or outputs may not match what is used or implemented in practice.

3. Outline the data and technologies to use in development, and update the outline throughout the project:

- The outline should contain:

- Data that will be needed – sketch a basic data model to be used

- Models that will be tested – develop an initial list of the techniques and algorithms you will test

- Technologies that will be used – sketch a solution architecture diagram

- Model evaluation methods – define how model performance will be evaluated (e.g., mean absolute percentage error, or MAPE), both for model selection and to ensure a minimum level of performance is achieved
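As a minimal sketch of what a pre-agreed evaluation method can look like, the Python below computes MAPE and uses it both to pick among candidate models and to enforce a minimum performance bar. The 20% threshold, the `select_model` helper, and the choice to skip periods with zero actuals are illustrative assumptions, not recommendations for any particular project.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical minimum performance bar, agreed with stakeholders before development.
MAX_ACCEPTABLE_MAPE = 20.0


def mape(actuals: Sequence[float], forecasts: Sequence[float]) -> float:
    """Mean absolute percentage error, expressed in percent.

    Periods with an actual value of zero are skipped because the percentage
    error is undefined there. How zeros are treated is itself an assumption
    worth reviewing with a business expert.
    """
    if len(actuals) != len(forecasts):
        raise ValueError("actuals and forecasts must have the same length")
    errors = [abs(a - f) / abs(a) for a, f in zip(actuals, forecasts) if a != 0]
    if not errors:
        raise ValueError("MAPE is undefined when every actual value is zero")
    return 100.0 * sum(errors) / len(errors)


def select_model(
    actuals: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    threshold: float = MAX_ACCEPTABLE_MAPE,
) -> Tuple[Optional[str], Dict[str, float]]:
    """Pick the candidate with the lowest MAPE, or None if none meets the bar."""
    scores = {name: mape(actuals, forecast) for name, forecast in candidates.items()}
    best = min(scores, key=scores.get)
    return (best if scores[best] <= threshold else None), scores
```

For example, `select_model([100, 200], {"naive": [150, 150], "model_a": [110, 180]})` scores the naive forecast at 37.5% and `model_a` at 10%, so it returns `"model_a"`. Returning `None` when nothing clears the bar makes "no model is good enough yet" an explicit outcome rather than a silent default.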

4. Communicate a realistic project plan, especially the timeline required and resources needed

- Explain the needed business and IT resources for model development, testing, and implementation.

- Ensure stakeholders are aligned on the timelines and resources.

5. Prepare data - the “ETL” for the model - and validate both input and output data from this process

- Data preparation is a common source of issues because it relies heavily on experience (it’s not taught or practiced in university), yet it often falls to junior team members as a “less technical” task, or is skipped due to overly aggressive timelines.

- Aim to be a perfectionist: every value of transformed data must be correct, and every issue needs to be investigated, as its root cause may be systematically affecting all of the data. Remember: incorrect data going into the model can invalidate the entire model.

- Review every data cleaning assumption (e.g., handling zeros, nulls, extreme values) with a business expert.

- Align aggregations with the business calendar (e.g., monthly aggregations should follow fiscal months, not calendar months).

- Export a CSV of key outputs from the data prep process and review the data in Excel. Create summary statistics and confirm with subject matter experts that the values make sense. In addition, check for impossible values, null values, zeros, etc.

- Create data quality test cases that throw warnings or errors - not having data issues today does not mean there will be no issues tomorrow, as the quality of new “raw” data can change.

- Pet peeve: data transformations (assuming structured data) should be done within SQL as much as possible. In particular, do not flip between languages, such as going from SQL to Python and back to SQL. SQL is often faster at data transformations, and keeping them in one place simplifies the code and any later automation.
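The data quality checks in this step can be automated so they run every time new raw data arrives. Here is a minimal, standard-library Python sketch, assuming the output of the data prep step is available as a list of row dictionaries: nulls and impossible values raise an error, a suspiciously high share of zeros only raises a warning, and the returned summary statistics are what you would review with subject matter experts. `check_column`, `DataQualityError`, and the default thresholds are hypothetical names, not part of any library.

```python
import math
import warnings
from typing import Any, Dict, List, Optional


class DataQualityError(Exception):
    """Raised when a check fails badly enough that the pipeline should stop."""


def _is_null(value: Any) -> bool:
    return value is None or (isinstance(value, float) and math.isnan(value))


def check_column(
    rows: List[Dict[str, Any]],
    column: str,
    min_value: Optional[float] = None,
    max_value: Optional[float] = None,
    allow_nulls: bool = False,
    max_zero_share: float = 1.0,
) -> Dict[str, Any]:
    """Validate one numeric column and return summary statistics for expert review.

    Nulls (when not allowed) and impossible values raise errors; an unusually
    high share of zeros only raises a warning, since it may be legitimate.
    """
    values = [row.get(column) for row in rows]  # a missing key counts as null
    nulls = sum(_is_null(v) for v in values)
    present = [v for v in values if not _is_null(v)]

    if nulls and not allow_nulls:
        raise DataQualityError(f"{column}: {nulls} null value(s)")
    impossible = [
        v for v in present
        if (min_value is not None and v < min_value)
        or (max_value is not None and v > max_value)
    ]
    if impossible:
        raise DataQualityError(
            f"{column}: {len(impossible)} value(s) outside [{min_value}, {max_value}]"
        )

    zero_share = sum(v == 0 for v in present) / len(present) if present else 0.0
    if zero_share > max_zero_share:
        warnings.warn(f"{column}: {zero_share:.0%} of values are zero", stacklevel=2)

    return {
        "count": len(values),
        "nulls": nulls,
        "zero_share": zero_share,
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": sum(present) / len(present) if present else None,
    }
```

Splitting failures into errors and warnings mirrors the distinction above: some issues should stop the pipeline outright, while others only need a human to look.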
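To illustrate aligning aggregations with a business calendar, here is a Python sketch that maps dates to fiscal months under an assumed 4-4-5 retail calendar (each quarter has months of 4, 4, and 5 weeks) with a hypothetical fiscal year start date. Real fiscal calendars vary, including 53-week years that this sketch does not handle, so the actual definition should come from the finance team.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple

# Hypothetical 4-4-5 retail calendar: each fiscal quarter has months of
# 4, 4 and 5 weeks, giving a 52-week year. 53-week years are not handled.
WEEKS_PER_FISCAL_MONTH = [4, 4, 5] * 4


def fiscal_month(day: date, fiscal_year_start: date) -> int:
    """Return the fiscal month (1-12) that contains `day`."""
    week = (day - fiscal_year_start).days // 7
    if not 0 <= week < sum(WEEKS_PER_FISCAL_MONTH):
        raise ValueError(f"{day} is outside the fiscal year starting {fiscal_year_start}")
    month_end_week = 0
    for month, weeks in enumerate(WEEKS_PER_FISCAL_MONTH, start=1):
        month_end_week += weeks
        if week < month_end_week:
            return month
    raise AssertionError("unreachable: week index already validated")


def fiscal_monthly_totals(
    daily_values: Iterable[Tuple[date, float]], fiscal_year_start: date
) -> Dict[int, float]:
    """Aggregate daily values into fiscal months rather than calendar months."""
    totals: Dict[int, float] = defaultdict(float)
    for day, value in daily_values:
        totals[fiscal_month(day, fiscal_year_start)] += value
    return dict(totals)
```

With a fiscal year starting 4 February 2024, 2 March 2024 still falls in fiscal month 1 while 3 March 2024 falls in fiscal month 2, so a calendar-month aggregation of March would mix two fiscal months.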

6. Build the model, evaluate model performance, and validate the model output with experts from the organization

- As specific steps to build a model depend on the type of model, the focus here will be on model evaluation and validation.

- When measuring model performance, look specifically at performance over time periods that drive the business. Sometimes data science projects look only at model performance over the entire operating time, and the “easier” operating times give a misleading picture – we want to see how the model performs when times are tough! Some examples:

- Forecasting sales for a retail store that gets one weekly replenishment from the warehouse on Wednesday, with orders due on Monday – Measure forecast error over a week, from Monday to Sunday, with a one-week lag. Daily error is not what drives performance here.

- Building an automated dispatching system for restaurant food delivery – Measure the performance during busy lunch/dinner hours, not mid-afternoon.

- Building an analytics model for a business whose make-or-break quarter is over the winter holiday – Look specifically at performance over the holiday period.

- Aim to “backtest” a model: emulate going back in time to see how the model would have performed, and compare against actual outcomes. Work with business experts to understand whether the model’s behavior makes sense, and why. This is a good way to move model validation from more theoretical measures, like error rates, toward tests that better emulate real life. During backtesting, also look at difficult-to-operate time periods that would best stress test the model. For more complex models (e.g., prescriptive models), building a simulation to test and improve the model is best practice.
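A backtest of the weekly retail example can be sketched as a rolling-origin evaluation: at each Monday origin, the model sees only data dated before that day and is scored on the total for the Monday-to-Sunday week one week later. In this Python sketch, `seasonal_naive` is a hypothetical stand-in for the real model (it repeats the last observed week), the forecaster interface is assumed, and the first origin needs at least one full week of history. `weekly_mape` can score all weeks or only the difficult ones, such as holiday weeks.

```python
from datetime import date, timedelta
from typing import Callable, Dict, Iterable, List, Optional

# Hypothetical forecaster interface: given the history known at the forecast
# origin, return daily forecasts for `horizon` days starting at the origin.
Forecaster = Callable[[Dict[date, float], date, int], List[float]]


def seasonal_naive(history: Dict[date, float], origin: date, horizon: int) -> List[float]:
    """Stand-in model: repeat the value from the same weekday in the last observed week."""
    last = max(history)
    return [
        history[last - timedelta(days=(last - (origin + timedelta(days=i))).days % 7)]
        for i in range(horizon)
    ]


def weekly_backtest(
    actuals: Dict[date, float],
    forecaster: Forecaster,
    first_origin: date,
    n_weeks: int,
    lag_weeks: int = 1,
) -> List[dict]:
    """Rolling-origin backtest scored on Monday-Sunday weekly totals.

    At each weekly origin (a Monday) the forecaster sees only actuals dated
    before the origin, and is scored on the Monday-Sunday week that starts
    `lag_weeks` weeks later.
    """
    if first_origin.weekday() != 0:
        raise ValueError("forecast origins must be Mondays")
    results = []
    for k in range(n_weeks):
        origin = first_origin + timedelta(weeks=k)
        history = {d: v for d, v in actuals.items() if d < origin}  # no peeking ahead
        target_start = origin + timedelta(weeks=lag_weeks)
        daily = forecaster(history, origin, 7 * (lag_weeks + 1))
        forecast = sum(daily[-7:])  # only the target week is scored
        actual = sum(actuals[target_start + timedelta(days=i)] for i in range(7))
        results.append({
            "week_start": target_start,
            "forecast": forecast,
            "actual": actual,
            "abs_pct_error": abs(actual - forecast) / actual if actual else None,
        })
    return results


def weekly_mape(results: List[dict], week_starts: Optional[Iterable[date]] = None) -> float:
    """MAPE (in percent) over all weeks, or only over chosen weeks such as holidays."""
    chosen = None if week_starts is None else set(week_starts)
    scored = [
        r["abs_pct_error"] for r in results
        if r["abs_pct_error"] is not None
        and (chosen is None or r["week_start"] in chosen)
    ]
    if not scored:
        raise ValueError("no scorable weeks selected")
    return 100.0 * sum(scored) / len(scored)
```

Restricting `history` to dates before the origin is what keeps the backtest honest; letting later data leak in would make the model look better than it could ever be in practice.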

7. Build value tracking reports for the model, easily accessible and understood by anyone in the organization

- Value tracking reports or dashboards should include the KPIs the model should be improving (from step 1) and indicators the model is being actively used, like a recommendation acceptance percentage. The prior backtesting step should help identify metrics that indicate model use.

- Do not delay building at least a basic value tracking report before launch: from day 1, there needs to be a way to show how the model is working.

- These reports should not only track success but also help identify when model use is falling, so the team can proactively discuss why and how to remediate it.
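As a sketch of the usage indicator mentioned above, the Python below computes a weekly recommendation acceptance rate and flags weeks where it drops noticeably below its recent average, the kind of signal that should prompt a conversation with users. The event format, the four-week baseline, and the 10-point drop threshold are all illustrative assumptions.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple


def weekly_acceptance_rate(events: Iterable[Tuple[date, bool]]) -> Dict[date, float]:
    """Share of model recommendations accepted per week.

    `events` holds one (week_start, accepted) pair per recommendation shown
    to a user; this log format is a hypothetical example.
    """
    shown: Dict[date, int] = defaultdict(int)
    accepted: Dict[date, int] = defaultdict(int)
    for week, was_accepted in events:
        shown[week] += 1
        accepted[week] += int(was_accepted)
    return {week: accepted[week] / shown[week] for week in sorted(shown)}


def falling_usage_weeks(
    rates: Dict[date, float], baseline_weeks: int = 4, max_drop: float = 0.10
) -> List[date]:
    """Flag weeks whose acceptance rate falls more than `max_drop` (absolute)
    below the average of the preceding `baseline_weeks` weeks."""
    weeks = sorted(rates)
    flagged = []
    for i in range(baseline_weeks, len(weeks)):
        trailing = [rates[w] for w in weeks[i - baseline_weeks:i]]
        baseline = sum(trailing) / len(trailing)
        if rates[weeks[i]] < baseline - max_drop:
            flagged.append(weeks[i])
    return flagged
```

Comparing against a trailing average rather than a fixed target lets the flag adapt as adoption matures, at the cost of slowly drifting declines going unnoticed; pairing it with a fixed floor is a reasonable variation.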

8. Deploy the model – “deploy” meaning the model being actively used with minimal data science team intervention

- The data science team should work closely with the IT team on deployment expectations and standards. Organizations differ in how they decide that software is “in production”, and as the developers of that software, the data science team should work with the IT team on how it is being used.

9. Operate the model sustainably - also called “ModelOps” / “AIOps” / “MLOps”

- Best-practice software development should be followed from the start: code version-controlled in Git, test cases written, logging built in, proper development environments, etc. While it’s OK for some of these elements to be missing at a project’s earliest stages, they should be in place before testing begins, and certainly before a model is deployed. As writers of code, data scientists should build software engineering skills so that these practices become second nature.

- There should always be a way to recreate a model’s output for deeper review – through code version control, model stores, tracking of model data inputs and outputs, logging, etc.

- Post-deployment, there must be a plan and mechanisms to ensure model performance does not degrade over time (e.g., model retraining, data refreshes). There should be dashboards that make model performance transparent, automated alerting on significant issues, automatic mechanisms to ingest new data, etc. These dashboards differ from the value tracking dashboards, which are intended for a broader, organization-wide audience.

- Pet peeve: Let’s use ModelOps or AIOps; using MLOps presupposes all AI is machine learning.
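A minimal Python sketch of two of the sustainability mechanisms in this step, under assumed names: `log_scoring_run` records the model version, a hash of the inputs, and the outputs of each run so a result can be traced and recreated, and `degradation_alert` compares recent error against the error measured during validation. The logger name, record fields, and 1.25x tolerance are hypothetical; in practice these records would feed the performance dashboards and automated alerting.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone
from typing import Any, Dict, List

logger = logging.getLogger("model_ops")  # hypothetical logger name


def log_scoring_run(
    model_version: str, inputs: Dict[str, Any], outputs: Dict[str, Any]
) -> Dict[str, Any]:
    """Log an auditable record of one scoring run.

    The SHA-256 of the canonicalised inputs lets a reviewer confirm that an
    archived input snapshot is exactly what the model saw; the snapshot
    itself must still be stored somewhere (assumed, not shown here).
    """
    canonical = json.dumps(inputs, sort_keys=True, default=str).encode("utf-8")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,  # e.g. a git commit hash or model-store tag
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "outputs": outputs,
    }
    logger.info(json.dumps(record, default=str))
    return record


def degradation_alert(
    recent_errors: List[float], validation_error: float, tolerance: float = 1.25
) -> bool:
    """Return True, and log a warning, when the average recent error exceeds
    the error measured at validation time by more than `tolerance` times."""
    if not recent_errors:
        return False
    recent = sum(recent_errors) / len(recent_errors)
    if recent > validation_error * tolerance:
        logger.warning(
            "recent error %.2f exceeds %.2f x validation error %.2f",
            recent, tolerance, validation_error,
        )
        return True
    return False
```

Sorting keys before hashing means the same inputs always produce the same fingerprint regardless of dictionary order, which is what makes the hash useful for matching a run to its archived data.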

Do you have questions, thoughts, feedback, comments? Please get in touch - I would love to hear from you: eddie@betteroptima.com